Two Circles

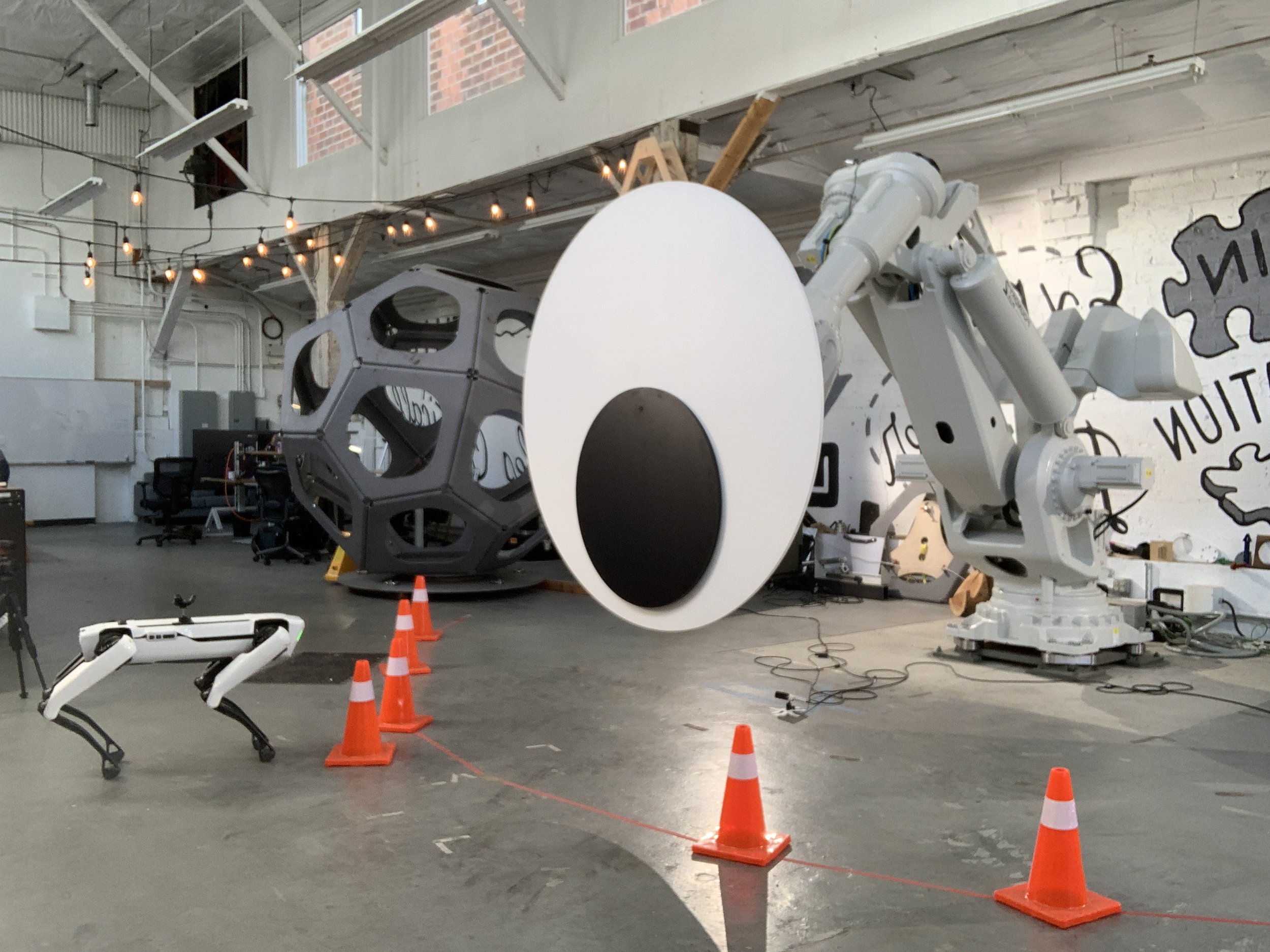

A Giant Googly Eye on a Giant Robot

Two Circles is a giant googly eye, 2 meters (6.5 feet) in diameter, that follows you around a room. It’s attached to a 10,000-pound industrial robot that senses and reacts to your movements. The googly eye will lurch towards you as you move towards it, but will retract if you try to poke its eyeball. This interactive installation highlights our minds’ natural tendency to project life and consciousness onto non-living things. Our mammalian instincts towards pareidolia and animism are powerful cognitive shortcuts: they can transform simple compositions (like a 1-meter black circle on top of a 2-meter white circle) into quirky, otherworldly creatures as soon as they start to move. As we race to develop increasingly sophisticated robotic and AI systems, striving for human-ness can be limiting. Instead, we should strive for unique, more-than-human experiences that can expand our collective imagination and foster new relationships with these technologies.

A depth sensor positioned on the floor tracks the human body, bringing this rich physical context into the virtual simulation. With precise calibration, the sensor also functions as a touch detector, identifying when someone makes contact with the pupil of the googly eye.
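One way a depth sensor could double as a touch detector is to count depth points that sit just in front of the pupil’s surface. The sketch below assumes the sensor delivers a point cloud in meters and that calibration has already expressed the pupil’s current pose (center, outward normal, radius) in the sensor’s frame. The `PupilPose` and `is_touching` names and the 5 cm / 20-point thresholds are hypothetical; this is an illustration, not the project’s actual detector.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class PupilPose:
    """Hypothetical pupil pose, already transformed into the depth sensor's frame."""
    center: Vec3   # pupil center, in meters
    normal: Vec3   # unit normal pointing out of the eye, into the room
    radius: float  # 0.5 m for a 1-meter pupil


def is_touching(points: list[Vec3], pupil: PupilPose,
                max_gap: float = 0.05, min_points: int = 20) -> bool:
    """Report a touch when enough points sit within max_gap in front of the pupil disk.

    max_gap (meters) and min_points are assumed tuning values; requiring many
    points helps reject isolated sensor noise.
    """
    hits = 0
    for p in points:
        offset = sub(p, pupil.center)
        height = dot(offset, pupil.normal)  # signed distance from the pupil plane
        if not 0.0 <= height <= max_gap:
            continue  # behind the eye, or too far in front to count as contact
        # Squared distance from the pupil's axis, measured within the plane
        radial_sq = dot(offset, offset) - height * height
        if radial_sq <= pupil.radius ** 2:
            hits += 1
    return hits >= min_points
```

Because the eye moves with the robot, the pupil pose in such a sketch would need to be recomputed every frame from the robot’s current joint state and the sensor-to-robot calibration.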

Historical Context

Two Circles may be the largest robotic googly eye, but it’s not the first. The arts and social sciences have a rich history of exploring how and why we project life onto non-living things.

The Heider-Simmel study, An Experimental Study of Apparent Behavior (1944), asked participants to describe a short film of abstract geometric shapes moving around a scene. The vast majority of participants instinctively described the shapes as characters, each with their own emotions, motivations, and other social attributes. This study illustrates the power of motion to imbue non-human forms with personality.

Golan Levin’s Double-Taker (Snout) (2008) is a worm-like robot, mounted at a museum’s entrance, that stared at you as you entered. It used custom real-time tracking and kinematic software to orient its googly eye towards visitors. Snout’s goal was to perform convincing “double-takes” at its visitors, so that it seemed continually surprised by the presence of its own viewers.

Edward Ihnatowicz’s S.A.M. (Sound Activated Mobile, 1968) is one of the first works of robotic art; it debuted at the pioneering 1968 techno-art exhibition Cybernetic Serendipity. S.A.M. is a robotic creature that listens and reorients itself towards the most persistent sound source. We can’t help but perceive a “face” in its array of four small microphones and concave sound reflector.

I did my PhD in Golan Levin’s art-research lab, the STUDIO for Creative Inquiry at Carnegie Mellon University. Because of Snout, we had a family tradition of putting googly eyes on every robot that came through the STUDIO (amongst other things). This googly eye shows off a feature of ofxRobotArm, our open-source software library for doing creative things with robot arms, which was made in collaboration with Dan Moore at the STUDIO in 2016.

Implementation Details

The software for Two Circles updates the tools I built for Other Natures to run on the giant ABB IRB8700. While the control system is the same, this robot’s kinematic limitations and your spatial relationship to it are quite different from those in Other Natures.
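One way to keep shared control software portable across robots of very different sizes is to isolate each robot’s reach in a small, swappable description and clamp every motion target into it. The sketch below is hypothetical: the `CylinderWorkspace` name is invented for illustration, it approximates the reachable region as a cylindrical shell around the robot’s base, and any numbers you plug in would be placeholders rather than measured IRB8700 limits. A real system would also have to respect joint limits and inverse-kinematics solutions.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class CylinderWorkspace:
    """Hypothetical reachable region: a cylindrical shell around the robot base."""
    base_x: float
    base_y: float
    min_radius: float  # targets closer to the base than this are pushed outward
    max_radius: float  # targets farther than this are pulled inward
    min_z: float
    max_z: float

    def clamp(self, target: Vec3) -> Vec3:
        x, y, z = target
        # Height and horizontal radius are independent, so each is clamped separately
        z = min(max(z, self.min_z), self.max_z)
        dx, dy = x - self.base_x, y - self.base_y
        r = math.hypot(dx, dy)
        if r < 1e-9:
            # Directly above the base there is no horizontal direction; pick +x arbitrarily
            return (self.base_x + self.min_radius, self.base_y, z)
        s = min(max(r, self.min_radius), self.max_radius) / r
        return (self.base_x + dx * s, self.base_y + dy * s, z)
```

With this split, moving to a different robot would mean swapping in new workspace values while the behavior code that produces targets stays unchanged.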

My creative workflow is based on advanced simulation tools that I developed on top of NVIDIA’s Omniverse Isaac Sim. This simulation-first approach lets me efficiently work through technical hurdles and then rapidly iterate on the quality and character of the robot’s motions and behaviors, even without access to physical robots. Once on-site, I fine-tune the robot’s behaviors and personality, taking into account intangibles that need to be experienced in person, such as the sound of the motors, the atmosphere of the space, or the energy of a crowd.

The robot’s Motion Behaviors are driven by where and how a person is moving. The robot is attracted to different features of the body (highlighted in yellow) and follows an agent-based trajectory (highlighted in orange) to achieve lifelike movements.
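The pairing of body attractors with an agent-based trajectory can be sketched with a classic steering approach. Here, Reynolds-style “arrive” steering is swapped in for illustration, since the project’s actual agent model isn’t described. Tracked body features are blended into a single weighted target, and a point-mass agent chases that target with capped speed and acceleration, which smooths out jittery tracking and gives the motion some inertia. The feature names, weights, limits, and function names are hypothetical placeholders; the agent’s position would then serve as the goal for the robot’s end effector.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v: Vec3, k: float) -> Vec3:
    return (v[0] * k, v[1] * k, v[2] * k)


def length(v: Vec3) -> float:
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def clamp_length(v: Vec3, max_len: float) -> Vec3:
    n = length(v)
    return v if n <= max_len else scale(v, max_len / n)


def attraction_target(features: dict[str, Vec3], weights: dict[str, float]) -> Vec3:
    """Blend tracked body features (e.g. head, hands) into one weighted target."""
    total = sum(weights.get(name, 0.0) for name in features)
    if total <= 0.0:
        raise ValueError("no weighted features to attract to")
    return tuple(
        sum(weights.get(name, 0.0) * p[i] for name, p in features.items()) / total
        for i in range(3)
    )


@dataclass
class Agent:
    position: Vec3
    velocity: Vec3 = (0.0, 0.0, 0.0)
    max_speed: float = 0.5    # m/s, placeholder
    max_accel: float = 1.0    # m/s^2, placeholder
    slow_radius: float = 0.3  # start easing in within this distance (m), placeholder

    def step(self, target: Vec3, dt: float) -> Vec3:
        """Advance one tick of 'arrive' steering toward target; return the new position."""
        to_target = sub(target, self.position)
        dist = length(to_target)
        # Full speed when far away; ramp down linearly inside slow_radius to settle smoothly
        speed = self.max_speed * min(1.0, dist / self.slow_radius)
        desired = scale(to_target, speed / dist) if dist > 1e-9 else (0.0, 0.0, 0.0)
        # Limit how much the velocity can change per tick (bounded acceleration)
        steering = clamp_length(sub(desired, self.velocity), self.max_accel * dt)
        self.velocity = clamp_length(add(self.velocity, steering), self.max_speed)
        self.position = add(self.position, scale(self.velocity, dt))
        return self.position
```

Parameters like `max_speed` and `slow_radius` are the kind of knobs that could shape the character of the motion, first in simulation and then on-site.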

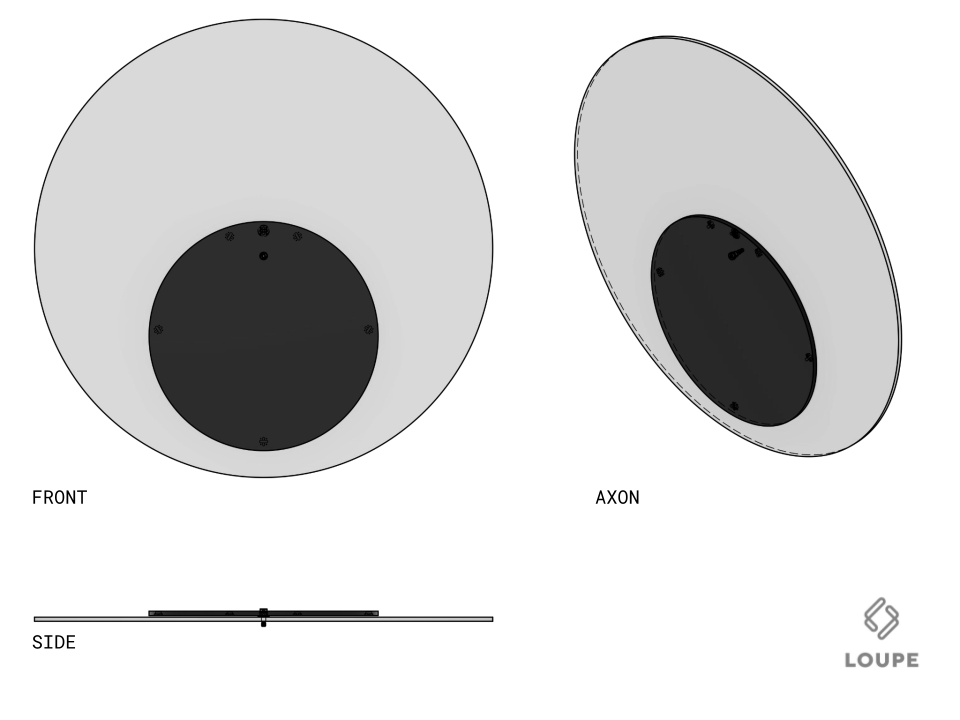

Simulating the googly eye on the robot helped us dial in the size and scale of the pupil and sclera.

The robot’s Motion Behaviors were developed in NVIDIA’s Omniverse Isaac Sim (left) and then validated in ABB’s hardware emulator, RobotStudio (right). Pre-visualizing and validating our control software before arriving on-site let us get our entire software and sensor stack up and running on a brand-new robot on the very first day.

Physical Assembly

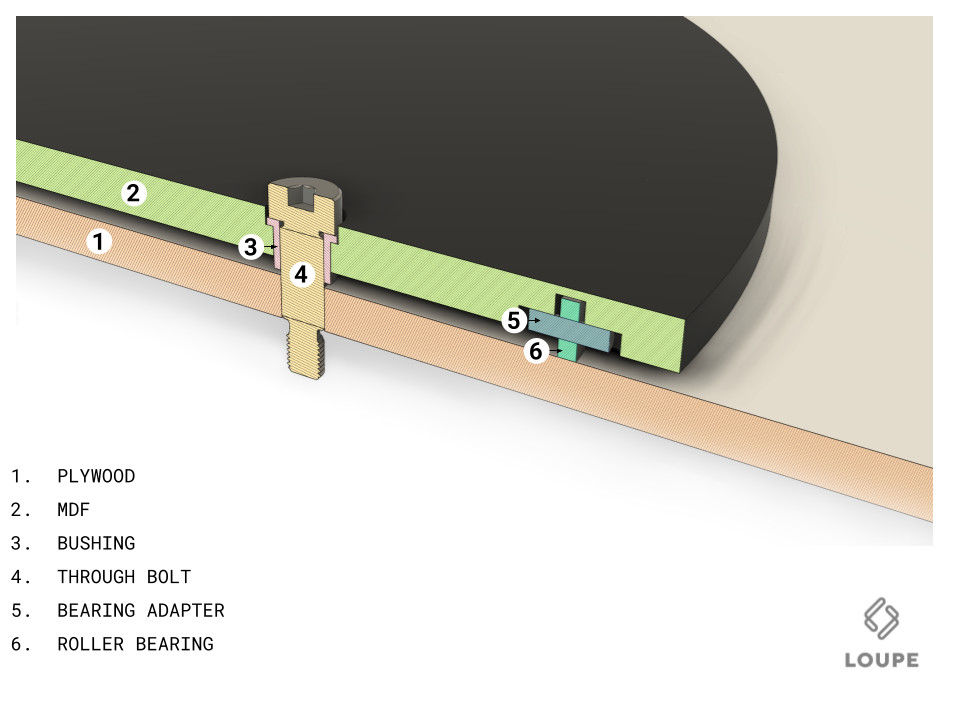

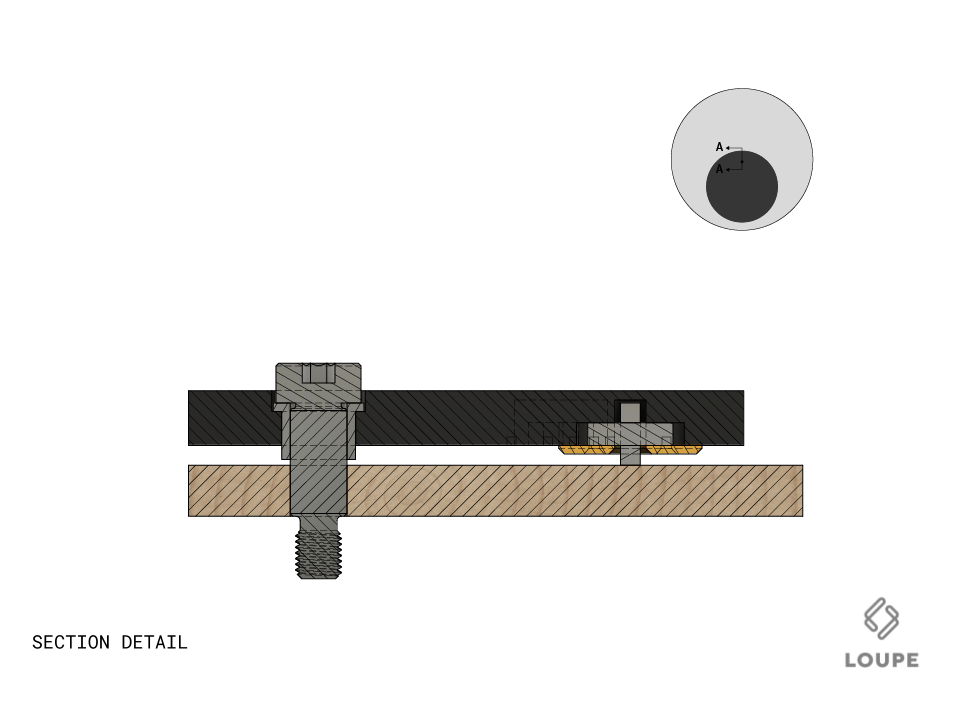

Two Circles’ white sclera is 2 meters (6.5 feet) in diameter, made of 3/4-inch birch plywood, and reinforced with Unistrut. The black pupil measures 1 meter (3.3 feet) in diameter and is reinforced with a steel plate around its pivot point. The pivot point is the only place where the pupil connects to the sclera, so we added bearings to the back of the pupil to maintain alignment and minimize friction as it rotates.


Project Credits

This project was developed as part of a one-week artist residency at Loupe HQ in Portland, Oregon, in March 2023. It is an ATONATON x Loupe collaboration.

Concept & Software Development: Madeline Gannon

Googly Eye Design & Fabrication: Team Loupe (David Nichols, Jesse Shankle, Shane Reetz, Karl Robrock, Nathan Schenk, Jonathan Schell, and more!)